Update:

When this post came out, a few zealous RETE proponents had a field day bashing some of the ideas I presented here and referring to my dismissal of RETE (as a panacea for policy control) as "mental laziness". Since then, other people have come to similar conclusions, including Martin Fowler. In his article RulesEngine he states, "there's a lot to be said for avoiding more general rules systems", and advocates building simple custom, domain-specific rules systems. I happen to agree with Martin Fowler on this point.

Here is how to build a high-performance rules-based policy server in less than two hours using proven, free open-source software that can outperform incumbent rules-engine products by up to two orders of magnitude in common business-logic and policy scenarios. In addition, it is easier to use and more secure than its commercial counterparts.

All of the information in this article is an established part of what we in the engineering field refer to as "the literature" - the large base of common knowledge encompassed by published resources - and is taken from free, publicly available sources. Links to online references are included.

Rules engines are found in various forms and may be referred to as an expert system, a business process management system, a policy decision function, a policy manager, or a business rules engine. These network components make decisions based on rules that are programmed by administrators. Rules engines are all over the place. Examples include Service Oriented Architectures (SOA), workflow management systems, and logical components of many large-scale Internet applications. They're used in the financial industry, where they decide such things as whether or not you qualify for a new credit card, or what your maximum mortgage should be. They are also an important part of Internet and telecommunications applications.

Many kinds of applications don't require transactions to be processed in real-time. It doesn't matter if there's some latency on the request, or in some cases, if it takes hours to generate a report. But high-traffic Internet and telecommunications applications need to support millions of users and thousands of transactions per second with a turn-around time measured in milliseconds.

A friend of mine described rules engines as "web-time" applications at best, where page-load times of several seconds are typical. They tend not to scale well to multiple thousands of transactions per second with millisecond latency. Trying to use such a system for that kind of workload may be a case of using the wrong tool for the job.

Certain rules engines claim to be far better at processing rule sets than naive implementations, by using a RETE algorithm. RETE is a development of the branch of computer science known as Artificial Intelligence. It was originally developed as a solution for processing the very large sets of facts and rules generated in machine-learning algorithms and is used especially in forward-chaining inference engines.

Let's summarize what RETE advocates mean by a naive implementation and why they claim to be "often several orders of magnitude better". In part, it relates to a simplified logical structure, illustrated in the following example:

Example 1:

IF A and B do X

ELSE IF A and C do Y

Example 2:

IF A {

IF B do X

ELSE IF C do Y

}

The second example avoids double-checking condition "A". Likewise, a RETE algorithm takes a collection of rules and builds a network of nodes that eliminates redundancy in the logic. It also remembers the results of evaluating criteria, and allows them to be re-evaluated if the facts change. Admittedly, this example is overly simplified, but it gives you a general idea.
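To make the difference between the two examples concrete, here is a minimal PHP sketch that counts how often condition A is evaluated in each form. It is not a RETE implementation, only an illustration of the shared-condition idea; the function names and the `$facts` array are invented for this example.

```php
<?php
// Hypothetical illustration: count evaluations of condition A.
$evaluations = 0;
$checkA = function ($facts) use (&$evaluations) {
    $evaluations++;                 // record every time A is tested
    return !empty($facts['A']);
};

// Example 1: flat rules, A may be tested once per rule.
function flatRules($facts, $checkA) {
    if ($checkA($facts) && !empty($facts['B'])) {
        return 'X';
    } elseif ($checkA($facts) && !empty($facts['C'])) {
        return 'Y';
    }
    return null;
}

// Example 2: nested rules, A is tested once and shared.
function nestedRules($facts, $checkA) {
    if ($checkA($facts)) {
        if (!empty($facts['B'])) {
            return 'X';
        } elseif (!empty($facts['C'])) {
            return 'Y';
        }
    }
    return null;
}

$facts = array('A' => true, 'B' => false, 'C' => true);

$evaluations = 0;
$flat = flatRules($facts, $checkA);
$flatCount = $evaluations;

$evaluations = 0;
$nested = nestedRules($facts, $checkA);
$nestedCount = $evaluations;

printf("flat: %s (A checked %d times), nested: %s (A checked %d time)\n",
    $flat, $flatCount, $nested, $nestedCount);
```

With these facts both forms choose Y, but the flat form tests A twice and the nested form tests it once. With only a handful of rules the saving is small, which is the point made in the paragraphs that follow.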

Programmers optimize their control flow in a similar way. In a machine-learning environment where data sets grow very large, RETE is able to sort through the thousands of rules more efficiently than a human programmer. However, many kinds of applications have policies and rules that are fairly straightforward and don't involve thousands of machine-learned factoids.

This article by Loic Tregan of eBay from the W3C Workshop on Rule Languages for Interoperability questions the usefulness of RETE with greater scrutiny. To quote a portion of the article:

"Using two industrial implementations of the RETE and a sequential engine, both coming from the same vendor, we found a x10 factor degradation in performance, start-up time and used memory. Do we really need RETE ? ...We believe many transactional, stateless systems are best suited with simple sequential rules processing that do not require forward-chaining."

Tom Debevoise, author of "Business Process Management With a Business Rules Approach: Implementing the Service Oriented Architecture", wrote this article to suggest that RETE is not an appropriate choice for the majority of business rules:

"A good business rule mining team will strive to build concise, self-contained logic in the statements. Unless, they are building a diagnostic or expert system, the outcome is usually a small ruleset for each business area."

The article goes on to describe how well-written business rules tend to adhere to some common principles that make them less likely candidates for RETE processing.

It turns out that modern day compilers are also well-optimized tools for evaluating conditions, and they do a pretty good job of it. Modern compilers can take a run-on if-else clause or a poorly implemented control structure and simplify it down to a fairly streamlined set of instructions.

So why not use compiler optimizations to streamline the evaluation of conditional program flows such as rule sets? Mainly because you don't want to have to write your rules in C and compile them.

A scripting language, on the other hand, doesn't need to be compiled first. Most rule sets are best expressed as simple scripts, anyway. Some rules engines refer to their rules as "scripts", although they may have a much steeper learning curve than most scripting languages. Indeed, it has been said that expert systems "qualify as programming languages, although certainly with a narrower range of application than most programming languages" [1].

So why not implement rules in a scripting language? Well, there is a perception that scripts are slow. That perception is not entirely accurate: there are circumstances where scripts can perform very well. We need to take a look at what happens when you run a script.

Most of the time, when you run a script, the interpreter first turns it into bytecode. This phase is called compiling (scripts do get compiled, just at runtime). Next, the bytecode is executed by the script engine, be it SpiderMonkey, Zend, Perl or what have you. The next time you run the script, the whole process starts over again. This repeated conversion of the script into bytecode is where the real performance pinch begins to be felt.

There is, however, a nice solution to this problem called a bytecode cache. It holds onto the bytecode that the interpreter produces and keeps it on hand for the next time the script runs, instead of compiling it all over again. This saves a lot of time.
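The idea can be sketched in a few lines of PHP. This is a conceptual model only, not how APC is implemented: `compileScript()` is a stand-in for the interpreter's compile step (here it merely tokenizes the source), the cache is a per-process array rather than shared memory, and all function names are invented for the example.

```php
<?php
// Conceptual sketch: cache the result of an expensive "compile" step,
// keyed by file path and invalidated when the file's modification time changes.
$compileCount = 0;

// Stand-in for the real parse-and-compile phase (assumption for illustration).
function compileScript($path) {
    global $compileCount;
    $compileCount++;
    return token_get_all(file_get_contents($path));
}

function loadCompiled($path) {
    static $cache = array();
    $mtime = filemtime($path);
    // Recompile only on the first request or after the file has changed.
    if (!isset($cache[$path]) || $cache[$path]['mtime'] !== $mtime) {
        $cache[$path] = array('mtime' => $mtime, 'code' => compileScript($path));
    }
    return $cache[$path]['code'];
}

// Simulate 100 requests for the same rule script.
$script = tempnam(sys_get_temp_dir(), 'rule');
file_put_contents($script, '<?php echo "ok";');
for ($i = 0; $i < 100; $i++) {
    loadCompiled($script);
}
echo "compiled $compileCount time(s) for 100 requests\n";
unlink($script);
```

A real bytecode cache keeps the compiled form in memory shared by all server processes, so the saving applies across requests and not just within one process.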

A server, however it is implemented, must communicate with other network components via some protocol. In an IP network, solutions like XML-RPC and SOAP have become popular choices. Either one is better than deciphering bytes when you're trying to get things working.

The basic requirements so far consist of Operating System, HTTP Server, Scripting Language, and Database, which sounds a lot like LAMP. The basic Linux-Apache-MySQL-PHP combo in any of its themes and variations will do. LAMP doesn't have commercial backing per se, but it is a robust, secure and proven technology with a vast market share. It has been leveraged to provide carrier-grade, highly scalable services in an IP network.

With this proposal in mind, let's proceed to take a look at the installation and configuration of the components we need to build and test our rules-based policy engine.

Here are the basic components you can use to build a prototype. You might recognize that the same configuration is used in hundreds of Internet applications from Amazon to Facebook to... Zembly. It's the ubiquitous Linux, Apache, MySQL, and PHP combination and the popular APC cache. Many top-tier web applications use this combination to execute policy functions and application logic. Much work in Service Oriented Architectures (SOA) and Software as a Service (SaaS) has made use of this approach [PDF article, IT Professional, January 2007].

For deployment, the ideal platform is a multi-CPU dedicated server, but for experimenting, a LAMP stack running on a VMware virtual server is a good option. You can use VMware Server or VMware Player to create a virtual machine. Mac users can use VMware Fusion. I won't go into the details of installing these products and setting up a virtual server, as the product documentation itself is pretty good.

VMware Fusion - Setting up Ubuntu jeOS from an iso disk image


I chose to use JeOS (pronounced "juice") from Ubuntu. JeOS is an edition of Ubuntu Server that's been trimmed down especially for use on virtual machines. It comes with a minimal set of applications installed, so if you're not comfortable with configuring a Linux server or prefer a graphical desktop environment, you may wish to install Ubuntu Desktop instead. For production I'd recommend Ubuntu Server or CentOS. Again, the installation of the operating system is well documented on the Ubuntu website, so please follow the instructions there.

Ubuntu jeOS Installation


Apache, PHP and MySQL can be installed from the command line with this one-liner:

> tasksel install lamp-server

You may wish to install

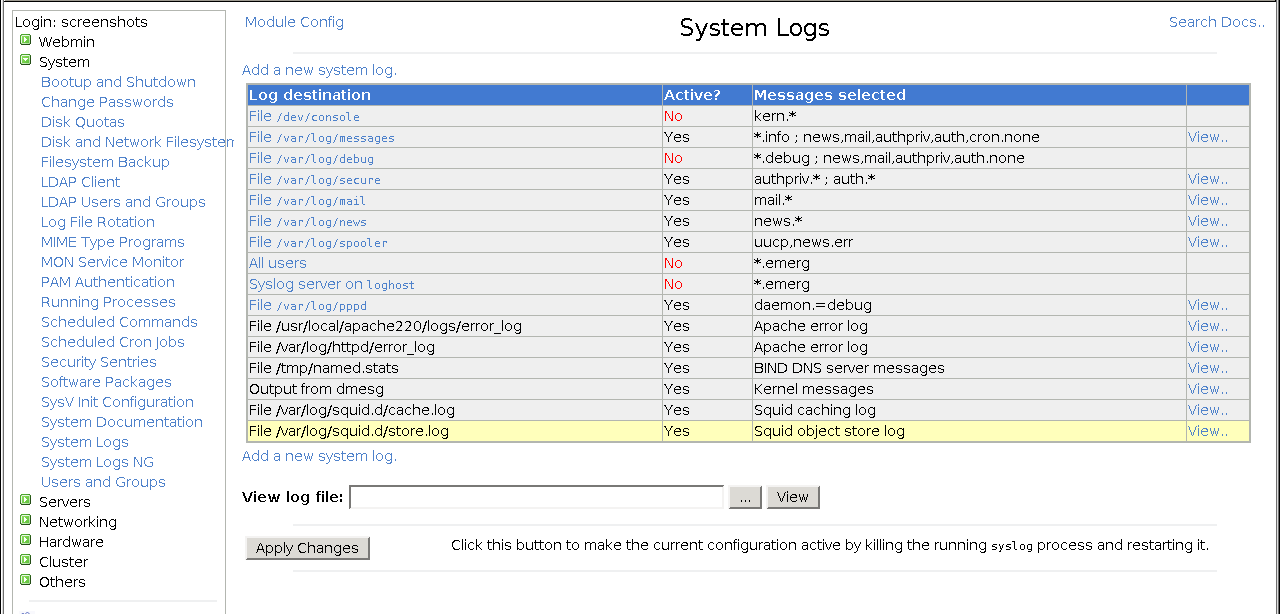

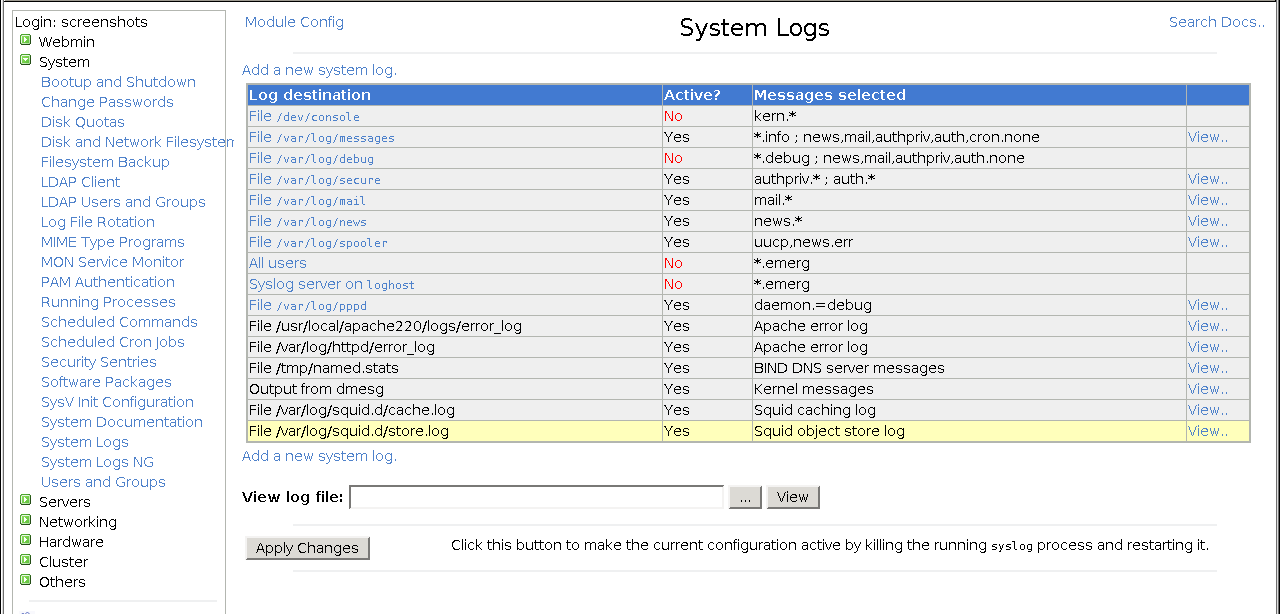

Webmin, a web-based server administration tool. It's not necessary, but it's useful for server administration. The instructions for installing on Debian / Ubuntu can be found

here.

A screenshot of Webmin's System Logs configuration


Next, install phpMyAdmin, a web-based MySQL administration tool.

> apt-get install phpmyadmin

Visit the phpMyAdmin web site for installation and configuration instructions. You should be able to launch a browser on your host operating system and connect to the Apache web server on the virtual machine. Run 'ifconfig' on the VM to find its IP address, then connect to http://[ip address]/phpmyadmin. If this doesn't work for you straight away, see http://www.jumpbox.com/node/816 for troubleshooting tips.

phpMyAdmin Screen


The last piece of software to set up is APC, the Alternative PHP Cache. Prior to installing APC, you may need to install the following as well:

> apt-get install make

> apt-get install php5-dev

> apt-get install apache2-threaded-dev

> apt-get install php-pear

Now you should be able to fetch APC using PECL:

> pecl install APC

> vi /etc/php5/apache2/php.ini

Add the following lines to the php.ini file:

extension=apc.so

apc.shm_size = 32

Copy the APC user interface page to your docroot:

> cp /usr/share/php/apc.php /var/www

Additional info on installing APC:

http://www.linuxweblog.com/node/576

http://www.vbulletin.com/forum/archive/index.php/t-165367-p-2.html

At this point you should be able to point the web browser on your host system to the webserver running on your VM and connect to:

http://[vm's IP address]/apc.php

You should see the GUI for APC at that URL.

APC "Alternative PHP Cache" GUI


Setting up all of this is the hardest part of the whole affair. Once you have the environment set up, the rest will be a breeze (I promise)!

LAMP is an ideal solution for providing policy-based decision-making functions for network applications and, in fact, is already used in this capacity in a number of massively multi-user web applications. A key component in providing the required speed and scalability is a bytecode cache, such as the perennial favorite of LAMP developers: the Alternative PHP Cache (APC). When dealing with the typical set of policies for many kinds of applications, this approach can offer benefits over some well-known rules engines, including better performance, ease of use, and speed of implementation.

Now that the basic requirements are in place, let's review the system.

A Complete System

The first point may come as a surprise: if you've set up your LAMP server and APC cache, you've already got your rules engine. You can start using it (almost) right away. A LAMP stack is many things, and one of them is a platform for evaluating application logic, including business rules and policies. There will be a few improvements and features to add - nothing difficult - but in its most basic form, the whole thing is ready to roll.

File-based, Scripted Rules

APC works with files. The rules that we write will be stored as files in Apache's docroot directory, and they'll be accessed via HTTP like any other web application. Personally, I like the idea that the rules are files. They're like config files, and it makes it really easy to have an htaccess-protected staging area and another live-deployment area (firewalled, private network segment, password protected, etc).

A CGI-Like Interface

Once you write a script and put it in the web server's document root, it's accessible over HTTP (via a web browser, wget, curl, a telnet session, the Firefox RESTClient plugin, or a custom web client). To Apache, the scripts are just another web page written with PHP. They behave very much like CGI scripts (and PHP can also be deployed as a true CGI program, conforming to the Common Gateway Interface specification) and will output some kind of text (like XML, for example). You may want some other output format, but that part of it is trivial.
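As a rough sketch of what such a rule script might look like, the following PHP file reads request parameters and writes a small XML response. The rule itself, the field names (`income`, `score`), the thresholds, and the response format are all invented for illustration; they are assumptions, not part of any particular product.

```php
<?php
// rule.php: hypothetical credit-limit policy, for illustration only.

// Evaluate the rule against a set of request parameters.
function decideCreditLimit($params) {
    $income = isset($params['income']) ? (int) $params['income'] : 0;
    $score  = isset($params['score'])  ? (int) $params['score']  : 0;

    if ($score >= 700 && $income >= 50000) {
        return array('decision' => 'approve', 'limit' => 10000);
    } elseif ($score >= 600) {
        return array('decision' => 'approve', 'limit' => 2000);
    }
    return array('decision' => 'deny', 'limit' => 0);
}

// Serialize the decision as a small XML document (assumed format).
function toXml($result) {
    return sprintf(
        '<response><decision>%s</decision><limit>%d</limit></response>',
        htmlspecialchars($result['decision']),
        $result['limit']
    );
}

// Under Apache, send an XML content type; on the command line, skip the header.
if (PHP_SAPI !== 'cli') {
    header('Content-Type: text/xml');
}
echo toXml(decideCreditLimit($_POST));
```

Dropped into the docroot, a file like this could be exercised with any HTTP client that can send a POST query. Keeping the rule in a plain function, separate from the output code, also makes it easy to test on its own.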

Testing via a Form

The rules/scripts can be tested through a simple web page with an HTML form and a POST query. A custom-built client would just open a socket and write out the appropriately formatted bytes. There's an elegant simplicity about the attribute-value pairs submitted in a POST query, but alternatives like XML-RPC are also nice for readability. SimpleXML, included in PHP, is a handy way to parse it.
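For XML input, a minimal SimpleXML sketch might look like the following. The request layout shown is a simplified, made-up format chosen for readability (it is not the XML-RPC wire format), and `parseRequest()` is a hypothetical helper name.

```php
<?php
// Hypothetical request format, invented for this sketch:
// <request><param name="score">720</param><param name="income">60000</param></request>

// Turn the XML body into a simple name => value array.
function parseRequest($body) {
    $xml = simplexml_load_string($body);
    if ($xml === false) {
        return array();   // malformed XML: treat as "no parameters"
    }
    $params = array();
    foreach ($xml->param as $param) {
        $params[(string) $param['name']] = (string) $param;
    }
    return $params;
}

// In a deployed script the body would come from the HTTP request, e.g.:
//   $params = parseRequest(file_get_contents('php://input'));
$sample = '<request><param name="score">720</param>'
        . '<param name="income">60000</param></request>';
print_r(parseRequest($sample));
```

The resulting array has the same shape as the attribute-value pairs of a POST query, so the same rule code can serve both kinds of client.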

Caching Benefits

APC transparently caches the bytecode of your scripts. You'll be able to see this using the APC GUI, and it will provide a dramatic speed-up over the per-query parsing and compiling required when there is no caching.

Parameter Caching

Your script is likely to use other criteria that may be stored in a database or come from system calls (like date and time) or other external sources. Rather than query these every time, APC can also be used to store variables for use in consecutive executions of your bytecode. APC allows you to store variables as simple attribute-value pairs, with a duration to indicate how long each should be cached. Once it expires, the variable is removed from the cache and can be re-fetched. This is great for caching variables that don't change very often. For example, you might cache information about the weather and only update it every 5 minutes. If you get 1,000 queries in the meantime, you've just saved 999 queries to your weather server.
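The weather example could be sketched as follows, using APC's `apc_fetch()` and `apc_store()` with a time-to-live. The `cachedValue()` wrapper, the `weather` key, and the fake weather loader are assumptions made for this example. The wrapper falls back to a per-process array when the APC extension is not loaded, so the sketch can be tried without a full server setup.

```php
<?php
// Fetch a value from the cache, or compute and store it for $ttlSeconds.
function cachedValue($key, $ttlSeconds, $loader) {
    if (function_exists('apc_fetch')) {
        $hit = false;
        $value = apc_fetch($key, $hit);
        if ($hit) {
            return $value;                       // still cached, skip the loader
        }
        $value = call_user_func($loader);
        apc_store($key, $value, $ttlSeconds);    // APC expires it after the TTL
        return $value;
    }

    // Fallback when APC is not loaded: a simple in-process cache with expiry.
    static $local = array();
    if (isset($local[$key]) && $local[$key]['expires'] > time()) {
        return $local[$key]['value'];
    }
    $value = call_user_func($loader);
    $local[$key] = array('value' => $value, 'expires' => time() + $ttlSeconds);
    return $value;
}

// Hypothetical expensive lookup; a real one would query a weather service.
$calls = 0;
$fetchWeather = function () use (&$calls) {
    $calls++;
    return array('temp_c' => 21);
};

// 1,000 rule evaluations within the 5-minute window.
for ($i = 0; $i < 1000; $i++) {
    $weather = cachedValue('weather', 300, $fetchWeather);
}
echo "weather lookups performed: $calls\n";
```

On the fallback path the loader runs once for the 1,000 evaluations. Under Apache with APC enabled, the cached value is also shared between requests, which is where the real saving comes from.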

Additional features, like the ability to group rules together into rule-sets, and a user interface, are both trivial to do in the LAMP environment.

You may also want to tune your MySQL installation to enable query caching. This can offer a noticeable improvement when the same queries are issued frequently.

Getting Over the "Scripting" Stigma

I realize there are many computer experts out there who enjoy criticizing PHP almost as much as they enjoy denouncing Microsoft, so I'd like to share something I picked up from the world of music. In my experience, people who denigrate a certain style of music are typically not musicians themselves. I've worked with some very talented musicians over the years, and I've always found the best ones are also the most gracious, and have a vast palette of musical appreciation. Similarly, in programming, the best programmers I've known have an appreciation for a variety of languages and technologies, recognize that there's a time and a place for each one, and are not too hasty to condemn one or the other. Sometimes, the criticism is just due to a lack of familiarity. I hope that this will encourage readers and software developers working in the area of business process management, policy and application servers, rules engines, web services, the Internet and telecommunications to explore the possibilities offered by this elegant technology.
