©2012 Darren DeRidder

Friday, December 14, 2012

Monday, December 10, 2012

Sunday, December 9, 2012

Saturday, December 8, 2012

Thursday, December 6, 2012

Pub Night

Ishigaki, Japan

©2012 Darren DeRidder

Whenever I visit Japan I'm always struck by the odd sense of scale. Everything is slightly more compact, and space is used much more efficiently than we're used to in North America. This seems especially evident in cities, where the compactness of buildings has an additive effect that can skew my perception of distance and perspective. What appears to be a large office building in the far distance can turn out to be a mere 1-minute walk away, and fit within four of our typical parking spaces. Of course, there are large buildings in Japan, big shopping arcades and all, but the way things are arranged within them is still done with an eye towards efficiency and flow that's unfamiliar to someone who's used to rambling through the chaotic, unkempt aisles of their suburban big box shopping plaza. It's one of the things I like about Japan: the tight and tidy use of space, the feeling that it's a precious commodity, and that it's used with respect and gratitude. This scene, taken in what you might call a "back alley" in downtown Ishigaki, isn't at all atypical. Tucked between a row of darkened doors, a little pub, open late, with the noren curtain veiling the entrance and a warm glow spilling out onto the pavement through the glass panes of the sliding doors. Irasshaimase! yells the owner as you duck inside. Welcome!

Wednesday, December 5, 2012

Tuesday, December 4, 2012

Saturday, December 1, 2012

Friday, November 30, 2012

Tuesday, November 27, 2012

Testing REST APIs with RESTClient and Selenium IDE

Update: You may also be interested in Testing Express.JS REST APIs with Mocha.

Tools used to test HTTP-based REST APIs include command-line utilities like curl and wget, as well as full-featured test frameworks like Mocha. Another interesting option is RESTClient, a plugin for Firefox that provides a user-friendly interface for testing REST APIs right from your browser.

Since RESTClient is a plugin, it's made up of locally stored XML, HTML, CSS, JavaScript and RDF files that Mozilla refers to collectively as "chrome". It turns out that because RESTClient is built mainly with HTML, you can actually use Selenium IDE to control it and run through automated test cases.

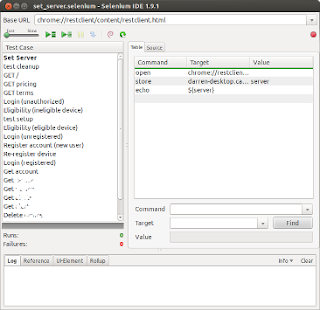

Selenium IDE is used to run tests on regular web pages, but I've never seen it used to control another Firefox plugin before. I gave it a try and discovered that it does this beautifully. Here's a picture of Selenium IDE driving RESTClient.

It's pretty cool to see Selenium IDE driving the RESTClient tool and knocking off all these test cases as it queries the API. I can run through an entire suite of tests, from setup to authentication, creating, retrieving, updating, and deleting resources, to final tear-down. I can drill down into any test cases that are failing and step through them to identify exactly what has gone wrong. As a bonus, the nicely formatted output can be cut-and-pasted to create some pretty nice looking documentation in my client developer's guide.

Using RESTClient's Favourite Requests

One way to start using Selenium IDE to drive RESTClient is to set up a collection of "Favorite Requests" in RESTClient. Once your favourite requests have been configured, it's relatively easy to create test cases in Selenium IDE to drive them.

This is not the best way to combine these tools, however. It means you'll have to make sure your favourite requests are loaded into RESTClient before starting your Selenium test suite. Your requests will probably be hard-coded so they're not easy to share. And if you do want to run the tests on another system, you'll need to export your RESTClient faves as well as the Selenium tests.

Driving RESTClient Programmatically

A better way is to drive RESTClient completely programmatically. Instead of using a list of preconfigured requests in RESTClient, it would be really nice to just create the requests on the fly from within your Selenium test suite. And this is completely possible.

Let's run through a few of the steps that you might need to accomplish this.

Selecting the Base URL

When you save your Selenium IDE test suite, the Base URL will define the starting point for your tests. This should be set to chrome://restclient/content/restclient.html

You can also launch RESTClient automatically from the first line of your first test case using the openWindow command:

openWindow | chrome://restclient/content/restclient.html

Making the API Server URL configurable

Depending on who's doing the testing, the server where the API is running will probably be different. If you want other people to be able to run your test suite against different servers you should at least make the hostname semi-configurable. You can do this by storing the hostname in a local variable that gets re-used for subsequent requests, like so:

store | darren.xyzzy.ca:3000 | server

echo | ${server}

That last line is just showing you how to access the stored "server" variable.

Test Preparation

I have a special URI that is available in development mode only, that allows initial set up of test data. This is the Selenese code I use to set up a call to that URI and confirm that test setup is complete:

click | css=i.request-method-icon.icon-chevron-down

click | link=GET

type | id=request-url | https://${server}/test/setup

click | id=request-button

click | link=Response Body

waitForElementPresent | css=#response-body-raw > pre

waitForTextPresent | test setup complete

Most of those commands were created using the "Record" button in Selenium IDE; then I hand-edited a few of them to get the right behaviour. The most interesting line of the test case above is the "type" command. This enters text into the "request-url" input box, and it uses the ${server} variable to fill in the hostname:port of the API server.

Storing and using variable URL parameters

Just like we did with the "server" variable, you'll probably want to save and re-use other parameters that you get back from your REST API. Here's how you would extract an Account ID from a JSON response, for example after you issue a POST request to create a new account, and store it for use in subsequent requests.

... commands to query the API ...

click | link=Response Body (Raw)

waitForElementPresent | css=#response-body-raw > pre

verifyTextPresent | Created account

storeText | css=#response-body-raw > pre | response

storeEval | JSON.parse(storedVars['response']).accountId | accountId

echo | ${accountId}

...

The interesting commands to note here are the "storeText" and "storeEval" commands. The storeText command stores the entire body of the response in a variable called "response". The next command, storeEval, creates an object from the JSON response by retrieving the response text from storedVars['response'] and passing it into JSON.parse(). Then it selects the accountId property, and stores it in a local variable called, unsurprisingly, "accountId". If subsequent request URLs are based on the accountId, we can use it just like the server parameter:

type | id=request-url | https://${server}/accounts/${accountId}/messages

If there are other properties of the JSON response body that we want to store for later use, we can use the same technique to get them, too.
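If you want to convince yourself of what that storeEval expression does, you can try it in a plain JavaScript console outside of Selenium. This is only a sketch: the storedVars object below is a stand-in for Selenium IDE's variable store, and the response body and accountId value are made up for illustration.

```javascript
// Stand-in for Selenium IDE's storedVars map (assumption for this sketch).
// The response text is a made-up example of what the API might return.
const storedVars = {
  server: 'darren.xyzzy.ca:3000',
  response: '{"message": "Created account", "accountId": "abc123"}'
};

// Same expression as in the storeEval command: parse the stored
// response text and pick out the accountId property.
storedVars.accountId = JSON.parse(storedVars['response']).accountId;

// Roughly what the "type" command does when it expands
// https://${server}/accounts/${accountId}/messages
const url = 'https://' + storedVars.server +
  '/accounts/' + storedVars.accountId + '/messages';

console.log(url);
```

Any other property of the parsed object can be pulled out the same way, by changing the property name at the end of the expression.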

Off to the races...

With these basic techniques you should be up and running RESTClient queries with Selenium IDE in no time. Then you can launch your test suite and go grab a nice cup of coffee while your computer merrily churns out one test case after another and your browser magically performs the steps in sequence. It's highly entertaining. But it has real practical value, too, as you're sure to find out when developing a client app and you need to troubleshoot the API communication.

Have fun!

Tuesday, September 4, 2012

Till We Meet Again

Evening at Lac Philippe, Quebec.

© 2012 Darren DeRidder

MK: a milestone year and a memorable summer. Prost!

Wednesday, August 29, 2012

Using local npm executables

When developing NodeJS apps, it used to be common practice to install module dependencies in a central node_modules folder. Now, it's recommended to install dependencies for each project separately. This allows fine-grained control over specific modules and versions needed for each application and simplifies installation of your package when you're ready to ship it. It does require more disk space, but these modules are mostly very small.

For example, if your project had this package.json file, running npm install would create a node_modules sub-directory and install express and mocha into it:

    {
      "name": "awesome-app",
      "version": "0.0.1",
      "dependencies": {
        "express": "3.0.0rc3",
        "mocha": "latest"
      }
    }

It's also fairly common to install some modules globally, using npm -g, if they provide command-line utilities. For example, if you wanted to have jslint available to all users on your system, you'd do this:

sudo npm -g install jslint

which would place this in /usr/local/bin/jslint.

If you specify a dependency in your package.json file, and that module includes an executable -- like jslint, for example, or mocha -- then npm install will automatically place those executables in your project's node_modules/.bin directory. To make use of these, add this prefix to your $PATH in your ~/.bashrc file:

export PATH=./node_modules/.bin:$PATH

This allows each one of your NodeJS projects to independently specify, install and use not only packages that are required in your JavaScript code, but also the command-line tools you need for testing, linting, or otherwise building your app.
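The same idea can be applied from inside a Node script, for instance when building the environment for a child process. Here is a minimal sketch, assuming a hypothetical helper called withLocalBin (my name, not something npm or Node provides). It uses an absolute path to the project's .bin directory rather than the relative ./node_modules/.bin used in the shell example.

```javascript
const path = require('path');

// Hypothetical helper: returns a PATH string with the project's local
// node_modules/.bin directory placed in front of the existing PATH,
// mirroring: export PATH=./node_modules/.bin:$PATH
function withLocalBin(projectDir, currentPath) {
  const localBin = path.join(projectDir, 'node_modules', '.bin');
  return currentPath ? localBin + path.delimiter + currentPath : localBin;
}

// Show what the PATH would look like for the current project directory.
console.log(withLocalBin(process.cwd(), process.env.PATH));
```

The resulting string could be passed as the PATH entry of the env option when spawning a command-line tool, so that the locally installed version is found first.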

Wednesday, August 22, 2012

Top Music Picks of 2011

When I started thinking about my top music picks for 2011, I had a hard time thinking of any one album that had really taken the prize. Instead an odd collection of material surfaced: a couple of web videos of studio footage, online music clips from a film composer, a few one-off tracks, plus a couple of albums that had been on the shelf long enough to qualify as "rediscoveries". It turns out there were also a bunch of new additions that got a lot of airplay last year. I've singled out a few of the favorites here and listed the rest in point form, and at the end included links to some of those music and video clips. Check them out!

Maria Joao and Mario Laginha - Cor (rediscovery)

This particular album has long been near the very top of my list of all-time favorites, but it sat on the shelf long enough that when I took it out for a spin, it was a very nice rediscovery. It holds such a high position on my list of favorites that it definitely deserves a mention. Maria Joao is a very gifted and unusual vocalist from Portugal. She's able to do amazing things with her voice, and isn't afraid to experiment. Her long-time collaborator Mario Laginha's performance on the piano is technically dazzling, and Trilok Gurtu's amazing percussion work rounds out the album to create something that is really hard to classify, a mix of avant-garde, world music and European jazz. For a while, I had the opening track set to play as my morning wake-up alarm. The hushed sounds of an early-morning cityscape and the soft, slumbering opening chords accompany an almost hymn-like vocal melody that slowly rises, flitters and then soars like a bird into a new day. This is an album that not everyone can appreciate, but those who do are likely to cherish it.

Daniel Goyone - l'heurs bleu (rediscovery)

The French jazz composer and pianist Daniel Goyone may not be well known in North America, but those whose musical tastes extend to European jazz have probably heard of him. Like other European artists, Daniel Goyone has taken the Great American Art Form and combined it with local influences to create something unique and highly sophisticated. His evocative compositions meander between plaintive and sanguine, floating somewhere between major and minor, never seeming to settle. The unique combination of instruments, including bandoneon, violin, marimba, oboe, and the aforementioned Trilok Gurtu on percussion, creates something that's again hard to classify but sits somewhere between jazz, chamber music and traditional French folk music. There are a few albums by Daniel Goyone that I've tried to get hold of; unfortunately they are very hard to find.

Brian Browne Trio - The Erindale Sessions

My biggest musical influence is undoubtedly the great Canadian jazz pianist Brian Browne. I first saw Brian playing at the Ottawa International Jazz Festival in 1999, and later was fortunate enough to study piano with him. A couple of years ago, Brian told me of an old acquaintance who had discovered a collection of master tapes from a series of concerts that the Brian Browne Trio gave at the Erindale Secondary School library. A teacher there by the name of Marvin Munshaw, who happened to be a huge jazz fan, brought the trio to the school and recorded them on a reel-to-reel deck. After some extensive remastering, the result is "The Erindale Sessions", featuring the original Brian Browne Trio as they sounded in the late 70's.

I mentioned this album last year before it was commercially available. It now is available, on iTunes and also direct from brianbrowne.com by mail order. My favorite tracks are probably "Blues for the UFOs", "Stompin' at the Savoy", and "St. Thomas", but the whole album is a treasure.

Yellowjackets - Timeline

The Yellowjackets are my favorite band of all time. For many Yellowjackets fans, the return of William Kennedy must have seemed too good to be true. Although Peter Erskine and Marcus Baylor both did some fantastic drum work over the years since he left the band, William Kennedy's unique, polyrhythmic style was a big part of the sound that people came to identify with the 'Jackets. And he doesn't disappoint on this album, which marks the band's 30th anniversary.

The opening track "Why Is It" features all the rhythmic and melodic intensity you'd expect from the Yellowjackets, but the overall tone of the album is more reflective, characterized by several richly crafted ballads, especially the title track "Timeline", the lush ballad "A Single Step", and the lyrical closing song "I Do". Beautiful work from an exceptional band, who after 30 years is still producing some of the finest electro-acoustic jazz ever.

Peter Martin - Set of Five

The piano music of Peter Martin was an exciting YouTube discovery, with his great 3-minute jazz piano clips. His short-play album "Set of Five" features five solo piano pieces that are all excellent, ranging from hard-bop to ballads and wrapping up with an amazing jazz cover of Coldplay's Viva la Vida.

Peter Martin's playing is technically brilliant, easy to listen to, and steeped in the best jazz piano traditions with a flair for originality and innovative arrangements. Definitely recommended. The album is available for download on iTunes. Check out Peter Martin's web site http://petermartinmusic.com for more on this talented pianist.

Bernie Senensky - Rhapsody

Bernie Senensky is another great Canadian jazz pianist. He has recorded quite a few albums, but it wasn't until my musical mentor Brian Browne played a few tracks from this CD for me that I got switched on to his music. The interpretations of standards on this album are creative and technically impressive. Top notch jazz piano trio music.

Other albums that were favorites of 2011 include the following, all of which are highly recommended!

- Bob Van Asperen, Orchestra of the Age of Enlightenment - Handel's Organ Concertos Opus 4

- Brad Mehldau - Highway Rider

- Charlie Haden & Hank Jones - Steal Away

- Lyle Mays - Solo Improvisations for Expanded Piano

- Bruce Cockburn - Life Short Call Now

- Keith Jarrett & Charlie Haden - Jasmine

- Pat Metheny - Secret Story

- Empire of the Sun - Walking On a Dream (Special Edition)

- Bill Evans - The Paris Concert

David Norland - Instrumentals from the Soundtrack to "Anvil: The Story of Anvil"

It seems a little strange that a film about a heavy metal band from Toronto could feature such nice background music. Somehow it worked, and contrasted beautifully with the over-the-top metal tunes Anvil is known for. The movie is actually a hilarious and moving documentary about a band that never quite made it to the big time, but refused to give up. David Norland's scoring for the film lent it a very human touch. The tune "Shibuya" in particular, which plays in the film's closing scene and which you can hear in the Audio section at the link below, really captured the feeling of the story.

Check out the audio clips here:

http://www.davidnorland.com/

Lyle Mays demos Spectrasonics Trilian with Alex Acuna

Lyle Mays' album "Solo Improvisations for Expanded Piano" was mentioned above. It's excellent, but here you can actually watch him performing live on a MIDIfied grand piano and synth, together with Alex Acuna, in HD video and high-quality audio.

http://vimeo.com/11854911

http://vimeo.com/11852047

Saturday, August 11, 2012

A Simple Intro to MVC, PubSub and Chaining in 20 Lines of JavaScript

In this post I want to present three JavaScript topics - MVC, Chaining, and PubSub - using the simplest possible examples in code.

JavaScript MVC frameworks have been getting a lot of attention, as sites like TodoMVC demonstrate, but the number of choices can be a little overwhelming. It really helps to have a good understanding of MVC first before you delve into some of the more sophisticated frameworks.

MVC

Model-View-Controller is mainly about separating the concerns of managing data (the Model), displaying it to the user (the View), and handling user input and performing actions on the data (the Controller). Exactly how the data in the Model makes its way to the View varies, but organizing your application in terms of these roles can help prevent messy, unmaintainable spaghetti code.

To follow this tutorial, use the Firebug console, or Chrome's developer tools to type in and run the code. Here's the full example running in Firebug; as you can see it's not a lot of code.

As you'd expect, our code will include a model, a view, and a controller.

Our model should hold some data. Since this is the simplest possible example, it will just hold one variable, a counter.

Our View is also going to be super simple: just printing to the console. It has a function called "update" to print something to the console.

The Model and the View don't know about each other directly. The Controller knows about them both, though, and it handles user input. To keep things simple, user input is via the console, too. The controller is going to have a function called "count" that we'll invoke from the console.

Here it is all together: MVC in 20 lines of JavaScript. After all it's not that complicated.
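Something along these lines fits the description above. Treat it as a sketch rather than the exact listing: the object names Model, View and Controller and the functions update and count come from the text, while the "counter: " label in the output is just my choice for illustration.

```javascript
// Model: holds the data. Here that is a single counter.
var Model = {
  counter: 0
};

// View: displays data to the user. Here it just prints to the console.
var View = {
  update: function (data) {
    console.log('counter: ' + data);
  }
};

// Controller: knows about both the Model and the View, and handles
// "user input", which in this example means calls made from the console.
var Controller = {
  count: function () {
    Model.counter += 1;          // update the model
    View.update(Model.counter);  // pass the new data to the view
  }
};

Controller.count(); // prints "counter: 1"
Controller.count(); // prints "counter: 2"
```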

Each time we invoke the Controller.count function, it updates the model and passes it to the view, which displays the new data.

Of course this is oversimplified. It leaves lots of room for improvement. So, let's move on to JavaScript chaining.

Chaining

If we call Controller.count() several times, we can see the data incrementing. If you're using Firebug, you'll see the data being displayed in our View (the console), followed by the word "undefined". That's because the count() function isn't returning any value; the result of running this function is undefined. If count() returned a value, the console would display it instead of "undefined". So, what if our count() function returned "this", i.e. it returns the Controller object itself? Then when we call Controller.count(), it would return the same Controller object back to us again, and we could do this:

This is pretty easy to implement:
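Here is a minimal sketch of that change together with a chained call. It assumes the same Model, View and Controller shapes as the MVC example (repeated here so the snippet runs on its own); the only new part is the return statement.

```javascript
var Model = { counter: 0 };

var View = {
  update: function (data) {
    console.log('counter: ' + data);
  }
};

var Controller = {
  count: function () {
    Model.counter += 1;
    View.update(Model.counter);
    return this; // hand back the Controller itself so calls can be chained
  }
};

// Each count() returns the Controller, so the next count() can follow directly.
Controller.count().count().count(); // prints counter: 1, counter: 2, counter: 3
```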

So that is chaining explained with two lines of JavaScript. Neat, huh? Now let's move on to the last concept.

PubSub

Notice that in the simple MVC example, the Controller updates the Model and then it has to tell the View to update, passing in the new data. This is fine if you only have one view, but what if you have several views of the same model? And what if you want to be able to add or remove views? This is when manually updating views in the Controller starts to become a pain. It would be nice if the model could just announce that it got updated, and the views could listen for this announcement and update automatically whenever the model changed.

Publisher-Subscriber (a.k.a. PubSub) is a variation of the Observer pattern that implements this idea. I'm not going to implement the whole PubSub pattern here, just the bare minimum to get the idea across.

To start with we'll have a list of subscribers, and a function to publish information to them. It just loops through all the subscribers and updates them.

Then our Model needs to announce that it was updated, so instead of modifying the counter directly, we'll give it a function called "incr" and use that to increment the counter. This function will modify the data and then announce it to the subscribers using the "publish" function.

Finally the Controller, instead of manually updating the model and view, will just call the model's incr function and let the PubSub pattern take care of updating the views.

Here it is all together:
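The pieces described above might look roughly like this when combined. It is a bare-bones sketch, not a full PubSub implementation: subscribers, publish, incr and count are the names used in the text, while the second view, LogView, is a hypothetical extra I've added to show more than one subscriber being updated.

```javascript
// A list of subscribers and a function that publishes data to all of them.
var subscribers = [];

function publish(data) {
  for (var i = 0; i < subscribers.length; i++) {
    subscribers[i].update(data);
  }
}

// The Model changes its own data and then announces the change.
var Model = {
  counter: 0,
  incr: function () {
    this.counter += 1;
    publish(this.counter);
  }
};

// Two views of the same model. LogView is a hypothetical second view
// that records what it was told instead of printing it.
var View = {
  update: function (data) {
    console.log('counter: ' + data);
  }
};

var LogView = {
  seen: [],
  update: function (data) {
    this.seen.push(data);
  }
};

subscribers.push(View, LogView);

// The Controller no longer touches the views; it only asks the model to change.
var Controller = {
  count: function () {
    Model.incr();
    return this;
  }
};

Controller.count().count(); // both views are updated on each call
```

Adding or removing a view is now just a matter of changing the subscribers list; the Controller and the Model stay the same.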

So there is MVC, Chaining and PubSub stripped down to their bare-bones essentials in about 20 lines of JavaScript. Obviously, this isn't a complete implementation of any of these patterns, but I think it makes the basic underlying ideas clear. After reading this and trying out the code in a JavaScript console, you might have a better idea of what a JavaScript MVC framework is good for, how it works, and what kind of features you like best.

Javascript MVC frameworks have been getting a lot of attention, as sites like TodoMVC demonstrate, but the number of choices can be a little overwhelming. It really helps to have a good understanding of MVC first before you delve into some of the more sophisticated frameworks.

MVC

Model-View-Controller is mainly about separating the concerns of managing data (the Model), displaying it to the user (the View), handling user input and performing actions on the data (the Controller). Exactly how the data in the Model makes its way to the View varies, but organizing your application in terms of these roles can help prevent messy, unmaintainable spaghetti code.

To follow this tutorial, use the Firebug console, or Chrome's developer tools to type in and run the code. Here's the full example running in Firebug; as you can see it's not a lot of code.

As you'd expect, our code will include a model, a view, and a controller.

Our model should hold some data. Since this is the simplest possible example, it will just hold one variable, a counter.

Our View is also going to be super simple: just printing to the console. It has a function called "update" to print something to the console.

The Model and the View don't know about each other directly. The Controller knows about them both, though, and it handles user input. To keep things simple, user input is via the console, too. The controller is going to have a function called "count" that we'll invoke from the console.

Here it is all together: MVC in 20 lines of JavaScript. After all it's not that complicated.
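As a minimal sketch of the three pieces just described (the names Model, View and Controller follow the text; the exact layout and the message printed are assumptions):

```javascript
// Model: holds the data, here a single counter.
var Model = { counter: 0 };

// View: displays data. Printing to the console is the whole "UI".
var View = {
  update: function (data) {
    console.log('counter is now ' + data.counter);
  }
};

// Controller: handles user input, changes the Model, then tells the View.
var Controller = {
  count: function () {
    Model.counter += 1;
    View.update(Model);
  }
};

Controller.count(); // prints "counter is now 1"
Controller.count(); // prints "counter is now 2"
```

Note that the Model and the View never refer to each other; only the Controller touches both.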

Each time we invoke the Controller.count function, it updates the model and passes it to the view, which displays the new data.

Of course this is oversimplified. It leaves lots of room for improvement. So, let's move on to JavaScript chaining.

Chaining

If we call Controller.count() several times, we can see the data incrementing. If you're using Firebug, you'll see the data being displayed in our View (the console), followed by the word "undefined". That's because the count() function isn't returning any value; the result of running this function is undefined. If count() returned a value, the console would display it instead of "undefined". So, what if our count() function returned "this", i.e. it returns the Controller object itself? Then when we call Controller.count(), it would return the same Controller object back to us again, and we could do this:

This is pretty easy to implement:
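A minimal sketch of the chained version, assuming the same kind of Model, View and Controller objects as in the MVC walkthrough. The only change is that count() returns this:

```javascript
var Model = { counter: 0 };

var View = {
  update: function (data) {
    console.log(data.counter);
  }
};

var Controller = {
  count: function () {
    Model.counter += 1;
    View.update(Model);
    return this; // hand back the Controller so another call can follow
  }
};

// Each call returns the Controller, so the calls can be strung together.
Controller.count().count().count(); // prints 1, then 2, then 3
```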

So that is chaining explained with two lines of JavaScript. Neat, huh? Now let's move on to the last concept.

PubSub

Notice that in the simple MVC example, the Controller updates the Model and then it has to tell the View to update, passing in the new data. This is fine if you only have one view, but what if you have several views of the same model? And what if you want to be able to add or remove views? This is when manually updating views in the Controller starts to become a pain. It would be nice if the model could just announce that it got updated, and the views could listen for this announcement and update automatically whenever the model changed.

Publisher-Subscriber (a.k.a. PubSub) is a variation of the Observer pattern that implements this idea. I'm not going to implement the whole PubSub pattern here, just the bare minimum to get the idea across.

To start with we'll have a list of subscribers, and a function to publish information to them. It just loops through all the subscribers and updates them.

Then our Model needs to announce that it was updated, so instead of modifying the counter directly, we'll give it a function called "incr" and use that to increment the counter. This function will modify the data and then announce it to the subscribers using the "publish" function.

Finally the Controller, instead of manually updating the model and view, will just call the model's incr function and let the PubSub pattern take care of updating the views.

Here it is all together:
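A minimal sketch of those three steps, assuming two hypothetical views (ConsoleView and HistoryView) to show that the Controller no longer needs to know how many views exist:

```javascript
// A list of subscribers and a function that publishes data to each of them.
var subscribers = [];

function publish(data) {
  for (var i = 0; i < subscribers.length; i++) {
    subscribers[i].update(data);
  }
}

// The Model changes its own data and then announces the change.
var Model = {
  counter: 0,
  incr: function () {
    this.counter += 1;
    publish(this);
  }
};

// Two hypothetical views of the same model.
var ConsoleView = {
  update: function (data) {
    console.log('counter: ' + data.counter);
  }
};

var HistoryView = {
  history: [],
  update: function (data) {
    this.history.push(data.counter);
  }
};

subscribers.push(ConsoleView, HistoryView);

// The Controller only calls incr(); the views are updated through publish().
var Controller = {
  count: function () {
    Model.incr();
    return this;
  }
};

Controller.count().count(); // prints "counter: 1", then "counter: 2"
```

Adding or removing a view is now just a matter of changing the subscribers list.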

So there is MVC, Chaining and PubSub stripped down to their bare-bones essentials in about 20 lines of JavaScript. Obviously, this isn't a complete implementation of any of these patterns, but I think it makes the basic underlying ideas clear. After reading this and trying out the code in a JavaScript console, you might have a better idea of what a JavaScript MVC framework is good for, how it works, and what kind of features you like best.

Thursday, August 9, 2012

Serve gzipped files with Express.JS

Assuming you have minified, gzipped versions of your static JavaScript files already available (e.g. jquery.min.js.gz), you can serve the compressed files relatively easily. You don't really need to use on-the-fly gzip compression. The obvious benefit is faster page loading. Although it might seem like a small thing, saving a few kilobytes here and there in the midst of MB-sized downloads, such small performance optimizations can and *do* have a noticeable impact on responsiveness and overall user experience... just ask Thomas Fuchs.

If you had, for example, myscript.js, you could simply do this:

uglifyjs -nc myscript.js > myscript.min.js
cat myscript.min.js | gzip > myscript.min.js.gz

And then with a simple bit of URL re-writing, serve the compressed version whenever a supported browser requests the minified version:

Express 2.x :

// basic URL rewrite to serve gzipped versions of *.min.js files
app.get('*.min.js', function (req, res, next) {
    req.url = req.url + '.gz';
    res.header('Content-Encoding', 'gzip');
    next();
});

Express 3.x :

app.get('*.min.js', function (req, res, next) {
    req.url = req.url + '.gz';
    res.set('Content-Encoding', 'gzip');
    next();
});

Now, if the browser requests "./js/myscript.min.js", it will actually get a copy of myscript.min.js.gz from that URL, without doing a redirect, and the browser will automatically decompress the file and use it in the normal way.

If your scripts are small to start with, the benefits won't be that great. Concatenating all your scripts together, then compressing them, could offer a major improvement over several smaller files.

Note that this doesn't check to see which files actually have gzipped versions available, so you would need to be diligent about keeping your files current, or write a simple function to cache a list of the available gzipped files at run time.
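One way to add that check is sketched below. It is only an illustration: gzipRewrite is a hypothetical helper name, it assumes the static files live under a single directory, and it also looks at the Accept-Encoding request header, which the handlers above do not do. It uses only Node's fs and path modules, so it should work as plain middleware in front of the static file handler.

```javascript
var fs = require('fs');
var path = require('path');

// Hypothetical helper: returns middleware that rewrites *.min.js requests to
// the .gz twin only when the client accepts gzip and the file really exists.
function gzipRewrite(staticDir) {
  return function (req, res, next) {
    var acceptsGzip = /\bgzip\b/.test(req.headers['accept-encoding'] || '');
    var isMinJs = /\.min\.js$/.test(req.url);

    // existsSync keeps the sketch short; caching the list of .gz files at
    // start-up, as suggested above, would avoid a disk check per request.
    if (acceptsGzip && isMinJs &&
        fs.existsSync(path.join(staticDir, req.url + '.gz'))) {
      req.url = req.url + '.gz';
      res.setHeader('Content-Encoding', 'gzip');
      res.setHeader('Vary', 'Accept-Encoding');
    }
    next();
  };
}
```

Assuming the files are served from a directory called public, it could be registered with something like app.use(gzipRewrite(__dirname + '/public')) ahead of the static middleware.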

Tuesday, July 24, 2012

JavaScript Closures for 6-year-olds

When I first became interested in NodeJS I decided I needed to understand closures. They're supposed to be an advanced topic in JavaScript, but they're kind of fundamental to Node's asynchronous, callback-driven continuation-passing style.

My background is mainly in C/C++, so unlike Ruby programmers, who have probably been exposed to closures, I had to study up on them.

After reading some excellent technical descriptions and layman's articles, I came across a Stack Overflow post asking "How do JavaScript Closures Work?", which is really good. The writer was asking how you might explain closures to a six-year-old because, as a saying often attributed to Richard Feynman goes, "If you can't explain it to a six-year-old, you don't really understand it yourself".

Here's my attempt at an explanation of closures for six-year-olds:

A man goes on a long trip, leaving his home and family behind. He's very fond of his home and before he leaves he takes a picture to bring with him. On his travels he is sometimes asked to describe his home. At such times, he takes out the photograph and begins to describe it. He talks about the white picket fence, the flower boxes by the windows, and the apple trees in the back yard.

While he's away, however, his family has decided to renovate and build a 3-story condo on the spot where the house is. Gone is the white picket fence. Gone are the apple trees. Gone are the flower boxes by the windows. The entire scene has changed. Yet the travelling man knows nothing about these changes, and continues to talk about his home as he remembers it, pulling out his worn photograph to show to strangers along the way. He describes the white picket fence, the flower boxes and the trees. Unaware of the changes being made while he is away, in his mind and in his pocket he carries a picture of home that is a closure - a snapshot of the way things were - and that is all he knows.

In JavaScript, when you return a function, it's like the travelling man. The function will remember the scene where it came from, just the way it was.

var color = 'white';

function leaveHome () {
    // a variable in leaveHome's scope
    var description = "My house is " + color;

    // returns another function, creating a closure over description
    return function() {
        console.log(description);
    };
}

var travellingMan = leaveHome();

travellingMan(); // "My house is white"

color = 'red'; // They painted the house

travellingMan(); // "My house is white"

A couple of points to notice:

- In JavaScript, functions are objects, too. Functions can create and return other functions.

- When you return a function or pass one as an argument, it doesn't need a name. It can be defined right in-line as an anonymous function.

- When the returned function is created it "closes over" the variables in its scope. In the example it keeps access to the description variable, which was built when leaveHome() ran and is never reassigned, so repainting the house later doesn't change what the travelling man says.
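Strictly speaking, a closure holds on to the variables themselves rather than a frozen copy of their values. A minimal sketch, using hypothetical describe and repaint functions that are not part of the example above, shows two functions sharing one color variable:

```javascript
function leaveHome() {
  // color lives in leaveHome's scope; both functions below close over it
  var color = 'white';

  return {
    describe: function () {
      return 'My house is ' + color;
    },
    repaint: function (newColor) {
      color = newColor; // changes the same variable describe() reads
    }
  };
}

var home = leaveHome();

console.log(home.describe()); // "My house is white"
home.repaint('red');
console.log(home.describe()); // "My house is red"
```

The travelling man's story stays the same only because nothing ever reassigns description after it is built.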

Friday, July 20, 2012

Thursday, July 19, 2012

JSLint in Sublime Text 2

TL;DR: Easy JSLint in Sublime Text 2, featuring auto-lint on save, jump to errors, menu shortcuts and preferences. Download it from GitHub.

I've been using Sublime Text 2 as my go-to editor for JavaScript, and set up a custom build system in Sublime Text 2 to run JSLint and report errors automatically when saving a .js, .json, or .html file.

This has been working great. Whenever I save a file, it tells me immediately if there are any problems. Since I already have the file open with my recent changes, I can easily fix things up and re-save. There's no waiting for some monolithic build process to generate a report, which I may or may not pay attention to, or context switching between tools and screens to find the source of the problem. I set several options so it won't complain about typical NodeJS styles, and I make sure I get the "OK" when I save things. It keeps things very simple and as a result my code is all fully JSLint-ed.

If you have node installed, then all you need to do is install this package in Sublime Text. The install guide is on GitHub here:

https://github.com/darrenderidder/Sublime-JSLint

Update (22/01/2013): This package used to require the node-jslint module, but this didn't work well for Windows users. So I've added jslint directly into the package, and now the only dependency is to have node installed.

Update (13/05/2013): Jan Raasch added a bunch of cool features, like being able to lint an entire directory, and made some of the preferences easier to configure.

Wednesday, July 11, 2012

Selenium IDE Sideflow Update 2

I've updated the Sideflow (Selenium IDE Flowcontrol) plugin. You can download the latest version here: https://github.com/73rhodes/sideflow

The "gotolabel" command is renamed with camel-casing as "gotoLabel", so you may need to update your old Selenium tests if they use the old "gotolabel". Note that "goto" is a synonym for "gotoLabel", so if you wrote your tests using "goto" they should just keep working.

While I really appreciate contributions and suggestions, I've decided to remove the recent forEach additions. There were a few reasons for this.

- foreach functionality can be done with the existing while / endWhile commands.

- The implementation of foreach didn't actually provide a foreach command.

- Users reported errors in the new feature. Given the first two points, I wasn't really interested in debugging it.

To provide a simple way for users to build a collection, I added the "push" command. In Selenese, "push" works like you'd expect in JavaScript, except it will automatically create the array for you if it doesn't already exist.

So, to build up a collection, you can do this:

getEval | delete storedVars['mycollection']
push | {name: 'darren', status: 'groovy'} | mycollection
push | {name: 'basil', status: 'happy'} | mycollection

Note the use of 'getEval' to clear the collection first. Unlike 'runScript', 'getEval' runs in the context of the Selenium object, allowing us to modify storedVars.
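To make the "creates the array if it doesn't exist" behaviour concrete, here is a rough model of it in plain JavaScript. This is not the plugin's source: storedVars is a plain object standing in for Selenium's variable store, and this push function is a hypothetical equivalent of the command.

```javascript
// Stand-in for Selenium IDE's storedVars (assumption: a plain object).
var storedVars = {};

// Hypothetical model of what the "push" command does, not the plugin code.
function push(value, varName) {
  if (!Array.isArray(storedVars[varName])) {
    storedVars[varName] = []; // create the collection on first use
  }
  storedVars[varName].push(value);
}

delete storedVars['mycollection']; // same effect as the getEval line
push({name: 'darren', status: 'groovy'}, 'mycollection');
push({name: 'basil', status: 'happy'}, 'mycollection');

console.log(storedVars.mycollection.length); // 2
```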

To do something with every item in the collection (like foreach), you can do this:

storeEval | storedVars['mycollection'].length | x
echo | mycollection has ${x} items
while | storedVars['x'] > 0
storeEval | storedVars.mycollection[${x}-1] | temp
echo | ${temp}
storeEval | ${x}-1 | x
endWhile

Friday, June 8, 2012

Simple Intro to Synchronization / Serialization in NodeJS

One of the most common topics that comes up with people who are new to NodeJS is how to perform synchronous tasks in an asynchronous programming environment. There are two general responses to this:

- You're Doing It Wrong. Just learn how to deal with the asynchronous programming style.

- Use a module. Step.js, async.js, or any number of other ones.

Recently I wanted to create a little node script that would populate some tables in a database. I had a bunch of methods to do this, but they were all asynchronous functions and I needed to call them serially. For example, the first one would create a table. The second would populate some data in the table. And so on. Since my use case was fairly simple, and I didn't really want to download and start blindly using a flow control library to solve my problem, I decided to take it as an opportunity to see if I could create my own solution for serializing the function calls.

The resulting code is below. It's not especially pretty, or flexible. But after working it out, I felt that I did understand the general approach to serialization / synchronization in NodeJS a lot better. Hopefully looking at this simple example will shed some light on this for you as well.

To start with, I imported my init module. The functions I wanted to call synchronously are all exported by this module. I put these functions in an Array like this (note that I'm not actually invoking the functions here, just putting references to them in the array):

The next thing I did was create an array called "callbacks":

The idea is to create a bunch of special callbacks that will be invoked after each of the "steps" above. A callback function normally has parameters like (err, result), and a body that handles these. In addition to handling the err and result, however, when my callbacks were invoked I needed my callbacks to call the next step in the list. You can picture it something like this:

+--------+          +------+
| func_1 |--------->| cb_1 |
+--------+          +------+
                       |
+--------+             |
| func_2 |<------------+
+--------+
    |               +------+
    +-------------->| cb_2 |
                    +------+
etc.

Ok, bad ascii art, but hopefully you get the idea. Function 1 gets called, finishes, and callback 1 is invoked. Callback 1 does the usual err / result handling and then invokes Function 2. Function 2 finishes and callback 2 is invoked. It does the usual err / result handling and then invokes Function 3, and so on.

For each of my "steps", I need to create one of these smart callbacks that knows the next function to call. I do that in a for loop, creating a custom callback for each function in the list of "steps".

Once the customized callbacks are all created, I start off the chain of events by calling the first function and passing in the first custom callback, like tipping the first domino in a row.

The part where we create the custom callbacks is the most interesting. It's like a little factory that creates callback functions. The createCallback function creates custom callbacks and returns them. The idea of a function returning another function is a little odd, if you're new to functional programming. But in JavaScript, a function can be passed around like any other variable. And here we are creating custom callback functions that know the next function in the list of steps. They also know that - if there is a next function to call - they should invoke it by passing in the next callback.

Obviously this is a super simplified example, and doesn't even come close to the functionality provided by the flow control libraries that are readily available. But hopefully it's simple enough to give you a basic idea of how some of these modules go about solving the problem of taking asynchronous functions, and executing them in a synchronous, or serialized way.

For reference, here's the whole file... good luck and have fun!
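A minimal sketch of the approach described above. The three steps are hypothetical stand-ins for the functions exported by the init module (simulated here with setTimeout), and the names steps, callbacks and createCallback follow the text:

```javascript
// Records the order in which the steps actually finish.
var finished = [];

// Hypothetical stand-in for an asynchronous function from the init module.
// Each step takes a callback of the form (err, result).
function makeStep(name, delayMs) {
  return function (callback) {
    setTimeout(function () {
      finished.push(name);
      callback(null, name + ' done');
    }, delayMs);
  };
}

// References to the functions, not invocations.
var steps = [
  makeStep('createTable', 30),
  makeStep('insertRows', 20),
  makeStep('verifyRows', 10)
];

var callbacks = [];

// Factory: returns a callback that handles (err, result) for step i and then
// starts step i + 1, if there is one, passing in that step's own callback.
function createCallback(i) {
  return function (err, result) {
    if (err) {
      console.error('step ' + i + ' failed: ' + err);
      return; // stop the chain on error
    }
    console.log('step ' + i + ': ' + result);
    if (i + 1 < steps.length) {
      steps[i + 1](callbacks[i + 1]);
    }
  };
}

for (var i = 0; i < steps.length; i++) {
  callbacks.push(createCallback(i));
}

// Tip the first domino.
steps[0](callbacks[0]);
```

The delays shrink on purpose: if the three steps were all started at once they would finish in reverse order, but chained this way they finish in the order listed.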

Thursday, May 31, 2012

Wednesday, May 23, 2012

A Simple Introduction to Behavior Driven Development in NodeJS with Mocha

I recently had a chance to use the Mocha test framework for NodeJS on a large-ish project and wanted to provide a really simple introduction to it. Mocha is a unit testing framework for NodeJS apps from TJ Holowaychuk. As the successor to the popular Expresso test framework, it adds a number of new features. It supports both Test-Driven and Behavior-Driven development styles and can report test results in a variety of formats.

Mocha can be installed easily using npm. Assuming you have node and npm installed, you can install mocha as a global module using the -g flag so it will be available everywhere on the system.

npm -g install mocha

or perhaps

sudo npm -g install mocha

or, if you prefer a local install instead of a global install

npm install mocha
export PATH=./node_modules/.bin:$PATH

You're also going to want should.js, which you can install in your local project folder.

npm install should

Mocha, in addition to being a test framework, is a command-line utility that you run when you want to test things. You should be able to type `mocha -h` on the command line now and get some usage info.

Example

Before we can use mocha to test stuff we should have a little bit of code to test. I'm going to borrow from Attila Domokos' blog http://www.adomokos.com/2012/01/javascript-testing-with-mocha.html but try to simplify it just a bit. We're going to create two files in two different directories:

src/dog.js

test/dog_test.js

dog.js is our source code that we want to test. Normally this would be a module that you have written. Since this is a super-simple introduction, it just exports one function called "speak". We can use this to invoke the "speak" method, which will return "woof!".

// dog.js
function speak() {
    return 'woof!';
}

exports.speak = speak;

Now we can write something to test our code: dog_test.js. Remember this file goes under the test/ directory and dog.js is under the src/ directory. So here is how we would write a test case for Dog.

// dog_test.js
var should = require('should');
var dog = require(__dirname + "/../src/dog");

describe('dog', function() {
    it('should be able to speak', function() {
        var doggysound = dog.speak();
        doggysound.should.equal('woof!');
    });
});

Now there should be a src/ and a test/ directory with these two files in them.

src/dog.js

test/dog_test.js

Mocha will automatically run every .js file under the test/ directory. To run the tests you can simply type 'mocha' on the command line:

mocha

and it should give you the following output:

✔ 1 test complete (3ms)

or if you want a little more detail use:

mocha -R list

and it should give you this output:

✓ Dog should be able to speak: 0ms

✔ 1 test complete (2ms)

Mocha can generate several different styles of test reports. To see a list of the types of reports it can generate, type mocha --reporters.

Explanation

Let's take a quick look at the test case definition. A group of tests starts with "describe". You provide some title for the thing you're testing. You can choose whatever title you want, but it should be descriptive. Our test suite is called 'dog'.

describe("dog", function () {
    // your test cases go in here
});

You can describe more than one group of tests in the file.

describe("dog", function () {
    // your doggy tests
});

describe("puppy", function () {
    // your puppy tests
});

Test descriptions can be nested, which is often convenient.

describe("dog", function () {
    describe("old tricks", function () {
        // your old doggy tricks
    });

    describe("new tricks", function () {
        // your new doggy tricks
    });
});

Individual tests themselves start out like "it('should do such-and-so...". You can give the test whatever description you want, but generally you give it a brief explanation of what it should or shouldn't do. If you added more methods to Dog, you would add more tests within the "Dog" test description to test them.

describe("dog", function () {
    it('should be able to speak', function () {
        // the body of the speak test goes here
    });

    it('should be able to wag its tail', function () {
        // the body of the wag test goes here
    });
});

Our examples use should.js for writing test cases in Behavior Driven Development style. Should.js provides a bunch of cool assertions like foo.should.be.above(10) or baz.should.include('bar'), and so on. Check out the documentation for should.js here: https://github.com/visionmedia/should.js

it('should be able to speak', function () {
    var doggysound = dog.speak();
    doggysound.should.equal('woof!');
});

it('should be able to wag its tail', function () {
    var wags = dog.wag(5);
    wags.should.be.above(4);
});

Synch and Asynch Tests

These examples are all synchronous tests. To test asynchronous code, use the "done" callback which Mocha provides.

it('should be able to speak', function (done) {
    var doggysound = dog.speak();
    doggysound.should.equal('woof!');
    done();
});

When a test accepts the "done" callback, Mocha waits until done() has been called before moving on to the next test.

For a slightly more advanced tutorial check out http://dailyjs.com/2011/12/08/mocha/. It has some great tips about setting up a package.json and Makefile to help with test automation, as well as writing synchronous and asynchronous tests.

Thursday, April 12, 2012

Ottawa Javascript Rolls Again

The Ottawa Javascript group emerged from hibernation to hold our first meetup of the year at TheCodeFactory on April 11th. Here's a followup that I posted to the Ottawa Javascript Google group.

Thanks to Jon Abrams and Simon Kaegi who stepped in last night with a couple of impromptu demos. Ben also sends his apologies for not being able to make it. I know lots of people were looking forward to the WebGL / Javascript demo so hopefully we will get him to come next time and demo something.

First of all Jon put together a great little web application that you can check out at http://wiqr.herokuapp.com/

Jon went into fairly low-level detail that provided some interesting points of discussion. One particularly good point was the use of environment variables to hold things that you don't want to (or shouldn't) hard-code, like database passwords or keys.
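To illustrate the environment variable idea, here is a small sketch. It is not Jon's code: the helper readDbConfig and the variable names DB_HOST and DB_PASSWORD are made up for this example.

```javascript
// Hypothetical helper: read database settings from an environment object.
// DB_HOST and DB_PASSWORD are made-up variable names for this example.
function readDbConfig(env) {
  if (!env.DB_PASSWORD) {
    // Fail early instead of falling back to a hard-coded secret.
    throw new Error('DB_PASSWORD is not set');
  }
  return {
    host: env.DB_HOST || 'localhost', // non-secret values can have defaults
    password: env.DB_PASSWORD
  };
}

// A real app would pass process.env; a literal is used here so the sketch
// runs without any setup.
var config = readDbConfig({ DB_PASSWORD: 'example-only' });
console.log(config.host); // localhost
```

In a real application you would call readDbConfig(process.env) and set the variables when starting the process, so the secret never ends up in source control.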

For newcomers, it's easy to feel inundated by all the unfamiliar pieces when first looking at a node application. If you're interested in the Express framework, I recommend the screencasts at http://expressjs.com/screencasts.html

For people who want a dead-simple introduction to node, I have a little blog post here that might be useful. http://51elliot.blogspot.ca/2011/07/simple-intro-to-nodejs-modules.html

Redis is a very popular database that's becoming a favorite among the NodeJS crowd. For a good introduction to it, check out http://try.redis-db.com

Finally Simon Kaegi's demo of the Orion development environment was a great example of how advanced web-based applications are becoming. If you missed it you can check out the Orion project here: http://www.eclipse.org/orion/

Dan L, Owain and Simon K volunteered to help with the planning and organization so the next meetup should be a good one. If you're interested in presenting something please drop one of us a line.

Some ideas are:

- walk through the "try mongo", "try redis" or similar tutorial as a group, taking questions as we go

- show off a cool web application or technology that you found interesting and have a discussion around it

- demo your weekend project to a friendly group and get some feedback

minutes at the end for questions. Feel free to make suggestions for future meetups, too.

Thanks again everyone.

Sunday, March 18, 2012

Wednesday, January 25, 2012

Simple Intro to NodeJS Module Scope

Update: Here are slides for this talk at OttawaJS: "Node.JS Module Patterns Using Simple Examples".

Update 2: More Node.JS Module Patterns - still fairly basic but more practical examples.

People often ask about scope and visibility in Node.JS modules. What's visible outside the module versus local to the module? How can you write a module that automatically exports an object? And so on.

Here are six examples showing different ways of defining things in Node.JS modules. Not all of these are recommended but they will help explain how modules and their exports work, intuitively.
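Before the six examples, here is a quick sketch of the basic rule: anything declared with var inside a module stays local to it, and only what is attached to exports is visible to the caller. To keep the sketch in a single runnable file, it writes a throwaway module to a temp directory first. The module name counter.js and its contents are made up for this illustration.

```javascript
var fs = require('fs');
var os = require('os');
var path = require('path');

// Write a throwaway module (hypothetical name counter.js) to a temp directory
// so the whole sketch fits in one file.
var dir = fs.mkdtempSync(path.join(os.tmpdir(), 'scope-demo-'));
var file = path.join(dir, 'counter.js');
fs.writeFileSync(file, [
  'var count = 0; // declared with var: local to this module',
  'exports.increment = function () {',
  '  count += 1;',
  '  return count;',
  '};'
].join('\n'));

var counter = require(file);

console.log(counter.increment()); // 1
console.log(counter.increment()); // 2
console.log(counter.count);       // undefined: count was never exported
console.log(typeof count);        // undefined: count is not a global either
```

The exported function can still read and update count because it closes over the module's local scope.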

Exporting a global function

This module defines a global function called foo. By not putting var in front of the declaration of foo, foo is global. This is really not recommended. You should avoid polluting the global namespace.

// foo.js

foo = function () {

console.log("foo!");

}

Here is an app that uses it.

// Module supplies global function foo()

require('./foo');

foo();

Exporting an anonymous function

This module exports an anonymous function.

// bar.js

module.exports = function() {

console.log("bar!");

}

Here is an app that uses it.

// Module exports anonymous function

var bar = require('./bar');

bar();

Exporting a named function

This exports a function called fiz.

// fiz.js

exports.fiz = function() {

console.log("fiz!");

}

Here is an app that uses it.

// Module exports function fiz()

var fiz = require('./fiz').fiz;

fiz();

Exporting an anonymous object

This exports an anonymous object.

// buz.js

var Buz = function() {};

Buz.prototype.log = function () {

console.log("buz!");

};

module.exports = new Buz();

Here is an app that uses it.

// Module exports anonymous object

var buz = require('./buz');

buz.log();

Exporting a named object

This exports an object called Baz.

// baz.js

var Baz = function() {};

Baz.prototype.log = function () {

console.log("baz!");

};

exports.Baz = new Baz();

Here is an app that uses it.

// Module exports object Baz

var baz = require('./baz').Baz;

baz.log();

Exporting an object prototype

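This exports a prototype called Qux. Strictly speaking, what gets exported is the constructor function, so the app creates its own instances with new.

// qux.js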

var Qux = function() {};

Qux.prototype.log = function () {

console.log("qux!");

};

exports.Qux = Qux;

Here is an app that uses it.

// Module exports a prototype Qux

var Qux = require('./qux').Qux;

var qux = new Qux();

qux.log();
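One practical difference between the last two patterns: require caches a module after the first load, so an exported instance is shared by everyone who requires it, while an exported constructor lets each caller make its own object. Here is a sketch of that behaviour. The file names shared.js and ctor.js and the Tally type are made up for this example, and the modules are written to a temp directory so everything runs from one file.

```javascript
var fs = require('fs');
var os = require('os');
var path = require('path');

// Write two throwaway modules to a temp directory (hypothetical file names).
var dir = fs.mkdtempSync(path.join(os.tmpdir(), 'export-demo-'));

// Exports one instance, in the style of buz.js and baz.js.
fs.writeFileSync(path.join(dir, 'shared.js'),
  'var Tally = function () { this.n = 0; };\n' +
  'module.exports = new Tally();\n');

// Exports the constructor, in the style of qux.js.
fs.writeFileSync(path.join(dir, 'ctor.js'),
  'var Tally = function () { this.n = 0; };\n' +
  'exports.Tally = Tally;\n');

var a = require(path.join(dir, 'shared.js'));
var b = require(path.join(dir, 'shared.js'));
a.n = 5;
console.log(a === b); // true: the second require returns the cached exports
console.log(b.n);     // 5: both names refer to the same instance

var Tally = require(path.join(dir, 'ctor.js')).Tally;
var x = new Tally();
var y = new Tally();
x.n = 5;
console.log(y.n);     // 0: each call to new creates an independent object
```

So exporting an instance gives you something like a singleton, which is handy for shared state such as a logger, while exporting the constructor is the better fit when callers need separate objects.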

Thursday, January 5, 2012

The Global Power Shift

Paddy Ashdown's fantastic speech at TEDx Brussels was published today. This is well worth watching for anyone interested in what is happening in global power structures and the end of the American century. Absolutely brilliant talk, at once troubling and still inspirational.
